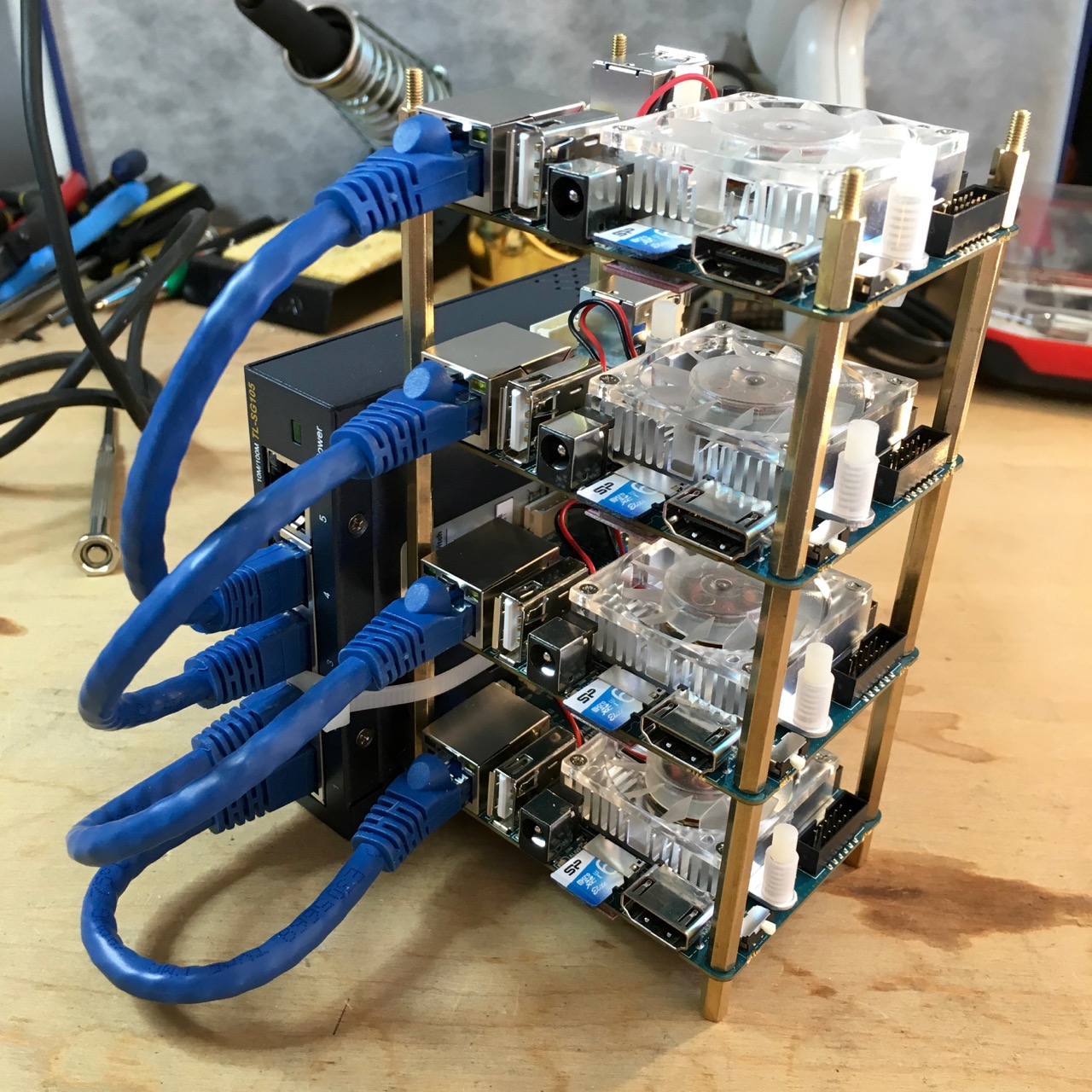

This project walks you through every step needed to build a distributed compute cluster from four ODROID XU4 single-board computers, then install everything required to run Apache Spark for data analysis. Total project cost is less than US$600.

Building the Cluster

- Cluster and Network Design

- Hardware Selection

- Construction

- Operating System Set Up

- Configuring the DHCP and NAT Services

- Adding the MicroSD Data Drives

Installing Data Analysis Software

Latest Software Builds

These are the latest builds for the armv7l platform for each of the software packages listed below.

Apache Hadoop

Here we install Hadoop onto the cluster and demonstrate some of its usage.

- Installing Java

- Getting Hadoop for ARM processor

- Preparing Cluster

- Installing and Configuring Hadoop

- Starting HDFS

- Mounting HDFS via NFS

- Running the Word Count job with Hadoop

- More to come …

Apache Spark

Apache Spark

Quantcast File System

The Quantcast File System (QFS) is a more efficient alternative to using HDFS to store data on the cluster.

Data Analysis Projects

- Apache web log parsing

- More to come …