NOTE – This article has been updated. It now assumes you have set up the cluster with Ubuntu 16.04, and it uses the latest builds of QFS v1.2.1 and Spark v2.2.0.

I have been using the Quantcast File System (QFS) as my primary distributed file system on my ODROID XU4 cluster. Because QFS has a low memory footprint, it works well alongside Spark: it lets me assign as much as possible of each ODROID XU4’s limited 2 GB of RAM to the Spark executor running on that node. QFS 1.2 was recently released. It brings many new features and updates, many of which are not relevant to my ODROID cluster use case. However, the updates that matter most for the ODROID XU4 cluster include:

- Fixed Spark’s ability to create a Hive metastore on a new QFS instance (QFS-332)

- Improved error reporting in the QFS/HDFS shim

- HDFS shim for the Hadoop 2.7.2 API, which the latest versions of Spark use.

In this post, I will update the ODROID XU4 cluster to use QFS 1.2.1.

Install QFS 1.2

cd /opt
sudo wget http://diybigdata.net/downloads/qfs/qfs-ubuntu-16.04.3-1.2.1-armv7l.tgz
sudo tar xvzf qfs-ubuntu-16.04.3-1.2.1-armv7l.tgz
sudo chown -R hduser:hadoop qfs-ubuntu-16.04.3-1.2.1-armv7l
sudo rm /usr/local/qfs
sudo ln -s /opt/qfs-ubuntu-16.04.3-1.2.1-armv7l /usr/local/qfs
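The `rm` and `ln -s` pair works when an old `/usr/local/qfs` link exists, but the `rm` prints an error on a node that never had one. A minimal sketch, assuming GNU coreutils `ln` (as shipped with Ubuntu 16.04), replaces the link in a single command with `ln -sfn`. The `-n` flag matters: without it, `ln -sf` follows the existing symlink into the old QFS directory and creates the new link inside that directory. The `switch_link` wrapper is a hypothetical name used only for illustration.

```shell
#!/bin/sh
# Hypothetical helper: repoint LINK at TARGET in one command.
#   -s  create a symbolic link
#   -f  remove an existing LINK first (no error if it is absent)
#   -n  if LINK is a symlink to a directory, replace the link itself
#       rather than creating a new link inside the old directory
switch_link() {
    ln -sfn "$1" "$2"
}

# On the master (root owns /usr/local):
#   sudo ln -sfn /opt/qfs-ubuntu-16.04.3-1.2.1-armv7l /usr/local/qfs
# On the slaves, the same idea through parallel-ssh:
#   parallel-ssh -i -h ~odroid/cluster/slaves.txt -l root \
#     "ln -sfn /opt/qfs-ubuntu-16.04.3-1.2.1-armv7l /usr/local/qfs"
```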

Now I will copy the configuration and launch scripts from my original QFS installation, which I keep in my odroid-xu4-cluster GitHub repository:

mkdir /usr/local/qfs/conf
mkdir /usr/local/qfs/sbin
cd ~
git clone https://github.com/DIYBigData/odroid-xu4-cluster.git
cp odroid-xu4-cluster/qfs/configuration/* /usr/local/qfs/conf/
cp odroid-xu4-cluster/qfs/sbin/* /usr/local/qfs/sbin/

And then push it out to the rest of the cluster:

cd /opt
rsync -avxz qfs-ubuntu-16.04.3-1.2.1-armv7l/ root@slave1:/opt/qfs-ubuntu-16.04.3-1.2.1-armv7l
rsync -avxz qfs-ubuntu-16.04.3-1.2.1-armv7l/ root@slave2:/opt/qfs-ubuntu-16.04.3-1.2.1-armv7l
rsync -avxz qfs-ubuntu-16.04.3-1.2.1-armv7l/ root@slave3:/opt/qfs-ubuntu-16.04.3-1.2.1-armv7l
parallel-ssh -i -h ~odroid/cluster/slaves.txt -l root "chown -R hduser:hadoop /opt/qfs-ubuntu-16.04.3-1.2.1-armv7l"
parallel-ssh -i -h ~odroid/cluster/slaves.txt -l root "rm /usr/local/qfs"
parallel-ssh -i -h ~odroid/cluster/slaves.txt -l root "ln -s /opt/qfs-ubuntu-16.04.3-1.2.1-armv7l /usr/local/qfs"
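The per-slave `rsync` commands differ only in the host name. A minimal sketch, assuming `~odroid/cluster/slaves.txt` lists one bare host name per line (the same file the `parallel-ssh -h` commands read), loops over that file instead of naming each slave. The `push_dir` function and the `RSYNC` override variable are hypothetical; `RSYNC` exists only so the loop can be dry-run without touching the network.

```shell
#!/bin/sh
# Hypothetical helper: copy SRC_DIR to DEST_DIR on every host listed in
# HOSTS_FILE, logging in as REMOTE_USER.
# Assumes one bare host name per line; blank lines and '#' comments are skipped.
# Usage: push_dir SRC_DIR DEST_DIR REMOTE_USER HOSTS_FILE
push_dir() {
    src="$1"
    dest="$2"
    user="$3"
    hosts_file="$4"
    # RSYNC may be overridden (for example RSYNC=echo) for a dry run.
    rsync_cmd="${RSYNC:-rsync}"
    # "|| [ -n ... ]" also handles a last line with no trailing newline.
    while read -r host || [ -n "$host" ]; do
        case "$host" in
            ''|'#'*) continue ;;
        esac
        # Give rsync /dev/null as stdin so its ssh session cannot consume
        # the remaining lines of the host file.
        "$rsync_cmd" -avxz "$src/" "$user@$host:$dest" < /dev/null || return 1
    done < "$hosts_file"
}

# Example, run from /opt on the master:
#   push_dir qfs-ubuntu-16.04.3-1.2.1-armv7l \
#     /opt/qfs-ubuntu-16.04.3-1.2.1-armv7l root ~odroid/cluster/slaves.txt
```

The same helper could also cover the Spark configuration push, for example `push_dir /usr/local/spark-qfs/conf /usr/local/spark-qfs/conf hduser ~odroid/cluster/slaves.txt`, provided `slaves.txt` lists every slave you want to update.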

Now start up QFS:

/usr/local/qfs/sbin/start-qfs.sh

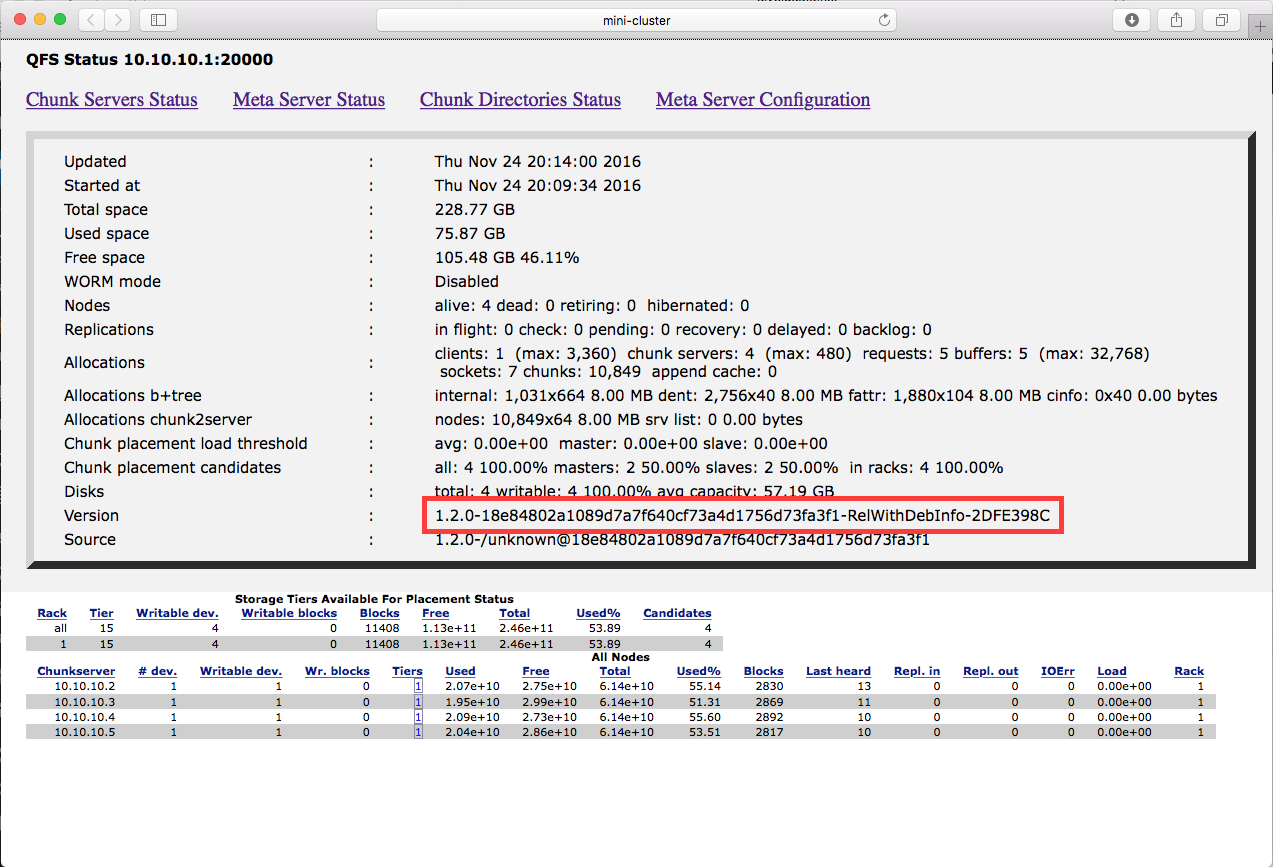

Point your computer’s web browser to the QFS monitor page at http://your-cluster-ip:20050 to verify that QFS 1.2 is now running.

Now you can blow away the previous version of QFS.

Update Spark-QFS Connection

cd /usr/local/spark-qfs/conf
vi spark-env.sh

Update the SPARK_DIST_CLASSPATH value to:

SPARK_DIST_CLASSPATH=/usr/local/qfs/lib/hadoop-2.7.2-qfs-1.2.1.jar:/usr/local/qfs/lib/qfs-access-1.2.1.jar
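If you would rather not edit `spark-env.sh` by hand, the change can be scripted. A minimal sketch, assuming GNU `sed` and that `spark-env.sh` holds the variable as a plain `SPARK_DIST_CLASSPATH=` line as shown above: it first checks that every classpath entry is an existing file (a mistyped jar name is easy to miss), then replaces that line or appends it if it is absent. The `set_env_line` and `check_classpath` function names are hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: set NAME=VALUE in an env file such as spark-env.sh.
# Replaces an existing line that starts with "NAME=", or appends one.
# Assumes GNU sed (-i without a suffix) and a VALUE free of '|', '&' and '\'.
set_env_line() {
    file="$1"
    name="$2"
    value="$3"
    if grep -q "^$name=" "$file"; then
        # '|' is the sed delimiter because classpath values contain '/'.
        sed -i "s|^$name=.*|$name=$value|" "$file"
    else
        printf '%s=%s\n' "$name" "$value" >> "$file"
    fi
}

# Hypothetical helper: print every colon-separated classpath entry that is
# not an existing file; the exit status is non-zero if any are missing.
# The ( ) body runs in a subshell, so changing IFS does not leak out.
check_classpath() (
    IFS=:
    status=0
    for entry in $1; do
        if [ ! -f "$entry" ]; then
            echo "missing: $entry" >&2
            status=1
        fi
    done
    exit "$status"
)

# Example on the master node:
#   CP=/usr/local/qfs/lib/hadoop-2.7.2-qfs-1.2.1.jar:/usr/local/qfs/lib/qfs-access-1.2.1.jar
#   check_classpath "$CP" &&
#     set_env_line /usr/local/spark-qfs/conf/spark-env.sh SPARK_DIST_CLASSPATH "$CP"
```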

Then push the configuration change out to the slaves and start Spark:

rsync -avxP /usr/local/spark-qfs/conf/ hduser@slave1:/usr/local/spark-qfs/conf
rsync -avxP /usr/local/spark-qfs/conf/ hduser@slave2:/usr/local/spark-qfs/conf
rsync -avxP /usr/local/spark-qfs/conf/ hduser@slave3:/usr/local/spark-qfs/conf
rsync -avxP /usr/local/spark-qfs/conf/ hduser@slave4:/usr/local/spark-qfs/conf
/usr/local/spark-qfs/sbin/start-all.sh

Launch the Jupyter notebook server and use your new file system with Spark.